— by Philipp Renoth

Introduction to cRAiG

In this post we’ll walk through a RAG evaluation of cRAiG’s retrieval‑augmented pipeline.

cRAiG — ConSol Retrieval Augmented Intelligent Generation — is our flagship solution for building privacy‑first Retrieval‑Augmented Generation (RAG) pipelines that comply with EU data‑protection standards. cRAiG exposes an OpenAI‑compatible API, letting you plug proprietary knowledge bases into modern LLM‑driven applications while retaining full control over inference.

Evaluation‑Driven Development

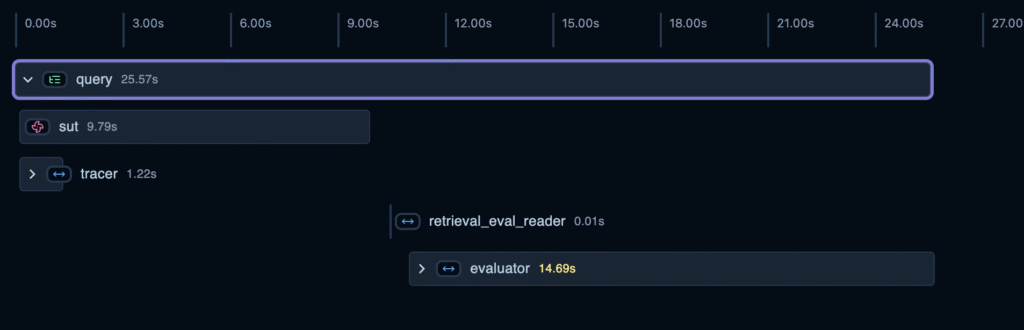

Screenshot of Langfuse timeline: system under test (sut) is queried, then the RAG evaluation runs

Any change to a cRAiG pipeline, whether it’s a new connector, a model swap, or a tuning tweak, is gated by our RAG evaluation results. If the metrics show no clear improvement, we keep the modification on the shelf. This discipline ensures that we only ship updates that demonstrably increase reliability, relevance, and compliance.

Ground‑Truth Data Model

Ground‑truth data represents the definitive reference against which the RAG pipeline’s output is measured. It captures the exact question a user would ask and the verified answer that the system should strive to reproduce, enabling objective assessment of relevance, precision, and recall.

Ground-Truth Test-Set

Each RAG evaluation record contains:

- user_input: the question posed to the RAG pipeline.

- reference: the expected answer produced by a trusted source.

Generated RAG Evaluation-Set

During execution we capture:

- response: the actual answer returned by the system.

- retrieved_context: the list of document chunks fed into the generation step.
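As a rough illustration, one evaluation record could be modelled as below. The class name `EvalRecord` and the `is_complete` helper are placeholders invented for this sketch and are not part of ragas or cRAiG; only the four field names come from the description above.

```python
from dataclasses import dataclass, field


@dataclass
class EvalRecord:
    """One evaluation item (hypothetical container, not a ragas class)."""

    # Ground-truth part, written before the run.
    user_input: str
    reference: str
    # Generated part, captured while the pipeline runs.
    response: str = ""
    retrieved_context: list[str] = field(default_factory=list)

    def is_complete(self) -> bool:
        """True once the pipeline has produced a response and context."""
        return bool(self.response) and bool(self.retrieved_context)


record = EvalRecord(
    user_input="Which protocols are supported for fetching emails?",
    reference=(
        "ConSol CM supports the following protocols for fetching emails: "
        "IMAP, IMAPs, POP3, and POP3s."
    ),
)
```

Keeping the ground-truth fields separate from the generated fields makes it easy to re-run the same test set against a changed pipeline.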

RAG evaluation Metrics – our best practice

We evaluate our RAG pipelines with ragas because it offers an easy‑to‑use, metric‑rich framework that integrates seamlessly with Haystack, enabling quick, reproducible assessments without additional plumbing.

Next, we’ll present a compact overview of the four metrics—setting the stage for a deeper, example‑driven exploration later in the post.

- Answer Correctness: We use the default weights of 75 % factual similarity and 25 % semantic similarity. A low score indicates missing or unrelated aspects, while a high score shows strong alignment with the reference. This metric alone does not guarantee that the answer originates from the retrieved context.

- Context Recall: A high score confirms that the pipeline relied on the intended documents; a low score flags possible hallucinations even when Answer Correctness is high.

- Answer Relevancy: Low values reveal tangential or off‑topic content that can confuse end users.

- Faithfulness: Low values signal missing evidence and reliance on the model’s internal knowledge rather than the supplied documents.

Step by step RAG evaluation

At ConSol we’ve leveraged extensive RAG research on our CM platform, focusing on manuals and internal wiki pages. Through close collaboration with colleagues we established a robust ground‑truth methodology that underpins our RAG evaluation framework.

For the following RAG evaluation steps we will use the same ground truth item:

- user_input: Which protocols are supported for fetching emails?

- reference: ConSol CM supports the following protocols for fetching emails: IMAP, IMAPs, POP3, and POP3s.

A good pipeline setup may produce a response like this:

- response: ConSol CM can retrieve mail using the standard mailbox protocols:

– **IMAP** (and the secure variant **IMAPS**)

– **POP3** (and the secure variant **POP3S**)

These four protocols are the ones supported for fetching emails 【<link removed>】

Some metrics are composite: they derive their values from a set of underlying measurements. These base metrics often involve distinct operations such as structured extraction from natural language, classification of statements, or the generation of text embeddings, and the same operation can appear in more than one metric. We explain each operation in detail, but only at its first occurrence.

All scores are rounded to two decimal places.

1. Answer Correctness

Answer correctness is calculated as a weighted combination—75 % factual similarity and 25 % semantic similarity—so that the metric reflects how well the generated answer covers every element of the ground‑truth reference. In practice, ensuring that all aspects of the reference are addressed carries far more weight than achieving a high semantic similarity score.

1.1 Factual Similarity

We run three distinct LLM invocations to assess how closely the generated response matches the reference.

- Reference extraction: The first invocation processes user_input together with the reference text and returns the set of factual statements present in the reference.

- Response extraction: The second invocation analyzes the same user_input paired with the model’s response, extracting the statements the answer actually makes.

- Classification: A third invocation classifies every extracted statement into one of three categories:

- TP (True Positive): Statement appears in the answer and is directly supported by at least one reference statement.

- FP (False Positive): Statement appears in the answer but has no direct support in the reference.

- FN (False Negative): Statement is present in the reference but missing from the answer.

From these counts a factual similarity score is calculated. ragas uses an F1-style score, TP / (TP + 0.5 × (FP + FN)), so both unsupported statements in the answer and reference statements missing from it lower the result.
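To make the arithmetic concrete, here is a minimal sketch of the scoring step. It assumes the F1-style formula TP / (TP + 0.5 × (FP + FN)), which is how we understand ragas to score the classification; the function name is our own and the counts are illustrative.

```python
def factual_similarity(tp: int, fp: int, fn: int) -> float:
    """F1-style score over classified statements (assumed formula)."""
    if tp == 0:
        return 0.0  # nothing in the answer is supported by the reference
    return tp / (tp + 0.5 * (fp + fn))


# Illustrative counts: 3 supported statements, 1 unsupported, 1 missing.
partial = factual_similarity(tp=3, fp=1, fn=1)

# With no false positives and no false negatives the score is perfect.
perfect = factual_similarity(tp=4, fp=0, fn=0)
```

Because FP and FN enter the denominator symmetrically, an answer is penalized equally for adding unsupported claims and for omitting reference facts.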

Factual Similarity – reference Extraction Prompt

Given a question and an answer, analyze the complexity of each sentence in the answer. Break down each sentence into one or more fully understandable statements. Ensure that no pronouns are used in any statement. Format the outputs in JSON.

Please return the output in a JSON format that complies with the following schema as specified in JSON Schema:

{"properties": {"statements": {"description": "The generated statements", "items": {"type": "string"}, "title": "Statements", "type": "array"}}, "required": ["statements"], "title": "StatementGeneratorOutput", "type": "object"}Do not use single quotes in your response but double quotes,properly escaped with a backslash.

--------EXAMPLES-----------

Example 1

Input: {

"question": "Who was Albert Einstein and what is he best known for?",

"answer": "He was a German-born theoretical physicist, widely acknowledged to be one of the greatest and most influential physicists of all time. He was best known for developing the theory of relativity, he also made important contributions to the development of the theory of quantum mechanics."

}

Output: {

"statements": [

"Albert Einstein was a German-born theoretical physicist.",

"Albert Einstein is recognized as one of the greatest and most influential physicists of all time.",

"Albert Einstein was best known for developing the theory of relativity.",

"Albert Einstein also made important contributions to the development of the theory of quantum mechanics."

]

}

-----------------------------

Now perform the same with the following input

input: {

"question": "Which protocols are supported for fetching emails?",

"answer": "ConSol CM supports the following protocols for fetching emails: IMAP, IMAPs, POP3, and POP3s.\n"

}

Output:

The evaluation LLM sees five statements for the ground‑truth reference.

Factual Similarity – reference Extraction Output

{

"statements": [

"ConSol CM supports protocols for fetching emails.",

"The supported protocols for fetching emails include IMAP.",

"The supported protocols for fetching emails include IMAPs.",

"The supported protocols for fetching emails include POP3.",

"The supported protocols for fetching emails include POP3s."

]

}

The same extraction step is performed for the response.

Factual Similarity – response Extraction Prompt

Given a question and an answer, ...

-----------------------------

Now perform the same with the following input

input: {

"question": "Which protocols are supported for fetching emails?",

"answer": "ConSol CM can retrieve mail using the standard mailbox protocols:\n\n- **IMAP** (and the secure variant **IMAPS**) \n- **POP3** (and the secure variant **POP3S**)\n\nThese four protocols are the ones supported for fetching emails 【<link removed>】."

}

Output:

This time it sees four statements for the response.

Factual Similarity – response Extraction Output

{

"statements": [

"ConSol CM can retrieve mail using standard mailbox protocols.",

"The supported protocols for fetching emails include IMAP and the secure variant IMAPS.",

"The supported protocols for fetching emails also include POP3 and the secure variant POP3S.",

"These four protocols are the ones supported for fetching emails."

]

}

Finally, the two sets of statements need to be classified into the three categories.

Factual Similarity – Classification Prompt

Given a ground truth and an answer statements, analyze each statement and classify them in one of the following categories: TP (true positive): statements that are present in answer that are also directly supported by the one or more statements in ground truth, FP (false positive): statements present in the answer but not directly supported by any statement in ground truth, FN (false negative): statements found in the ground truth but not present in answer. Each statement can only belong to one of the categories. Provide a reason for each classification.

Please return the output in a JSON format that complies with the following schema as specified in JSON Schema:

{"$defs": {"StatementsWithReason": {"properties": {"statement": {"title": "Statement", "type": "string"}, "reason": {"title": "Reason", "type": "string"}}, "required": ["statement", "reason"], "title": "StatementsWithReason", "type": "object"}}, "properties": {"TP": {"items": {"$ref": "#/$defs/StatementsWithReason"}, "title": "Tp", "type": "array"}, "FP": {"items": {"$ref": "#/$defs/StatementsWithReason"}, "title": "Fp", "type": "array"}, "FN": {"items": {"$ref": "#/$defs/StatementsWithReason"}, "title": "Fn", "type": "array"}}, "required": ["TP", "FP", "FN"], "title": "ClassificationWithReason", "type": "object"}Do not use single quotes in your response but double quotes,properly escaped with a backslash.

--------EXAMPLES-----------

Example 1

Input: {

"question": "What powers the sun and what is its primary function?",

"answer": [

"The sun is powered by nuclear fission, similar to nuclear reactors on Earth.",

"The primary function of the sun is to provide light to the solar system."

],

"ground_truth": [

"The sun is powered by nuclear fusion, where hydrogen atoms fuse to form helium.",

"This fusion process in the sun's core releases a tremendous amount of energy.",

"The energy from the sun provides heat and light, which are essential for life on Earth.",

"The sun's light plays a critical role in Earth's climate system.",

"Sunlight helps to drive the weather and ocean currents."

]

}

Output: {

"TP": [

{

"statement": "The primary function of the sun is to provide light to the solar system.",

"reason": "This statement is somewhat supported by the ground truth mentioning the sun providing light and its roles, though it focuses more broadly on the sun's energy."

}

],

"FP": [

{

"statement": "The sun is powered by nuclear fission, similar to nuclear reactors on Earth.",

"reason": "This statement is incorrect and contradicts the ground truth which states that the sun is powered by nuclear fusion."

}

],

"FN": [

{

"statement": "The sun is powered by nuclear fusion, where hydrogen atoms fuse to form helium.",

"reason": "This accurate description of the sun’s power source is not included in the answer."

},

{

"statement": "This fusion process in the sun's core releases a tremendous amount of energy.",

"reason": "This process and its significance are not mentioned in the answer."

},

{

"statement": "The energy from the sun provides heat and light, which are essential for life on Earth.",

"reason": "The answer only mentions light, omitting the essential aspects of heat and its necessity for life, which the ground truth covers."

},

{

"statement": "The sun's light plays a critical role in Earth's climate system.",

"reason": "This broader impact of the sun’s light on Earth's climate system is not addressed in the answer."

},

{

"statement": "Sunlight helps to drive the weather and ocean currents.",

"reason": "The effect of sunlight on weather patterns and ocean currents is omitted in the answer."

}

]

}

Example 2

Input: {

"question": "What is the boiling point of water?",

"answer": [

"The boiling point of water is 100 degrees Celsius at sea level"

],

"ground_truth": [

"The boiling point of water is 100 degrees Celsius (212 degrees Fahrenheit) at sea level.",

"The boiling point of water can change with altitude."

]

}

Output: {

"TP": [

{

"statement": "The boiling point of water is 100 degrees Celsius at sea level",

"reason": "This statement is directly supported by the ground truth which specifies the boiling point of water as 100 degrees Celsius at sea level."

}

],

"FP": [],

"FN": [

{

"statement": "The boiling point of water can change with altitude.",

"reason": "This additional information about how the boiling point of water can vary with altitude is not mentioned in the answer."

}

]

}

-----------------------------

Now perform the same with the following input

input: {

"question": "Which protocols are supported for fetching emails?",

"answer": [

"ConSol CM can retrieve mail using standard mailbox protocols.",

"The supported protocols for fetching emails include IMAP and the secure variant IMAPS.",

"The supported protocols for fetching emails also include POP3 and the secure variant POP3S.",

"These four protocols are the ones supported for fetching emails."

],

"ground_truth": [

"ConSol CM supports protocols for fetching emails.",

"The supported protocols for fetching emails include IMAP.",

"The supported protocols for fetching emails include IMAPs.",

"The supported protocols for fetching emails include POP3.",

"The supported protocols for fetching emails include POP3s."

]

}

Output:

Even though the two statement sets differ in size, the evaluation LLM understands that the statements of the ground‑truth reference are fully present in the response.

Factual Similarity – Classification Output

{

"TP": [

{

"statement": "ConSol CM can retrieve mail using standard mailbox protocols.",

"reason": "This statement is supported by the ground truth which mentions that ConSol CM supports protocols for fetching emails."

},

{

"statement": "The supported protocols for fetching emails include IMAP and the secure variant IMAPS.",

"reason": "This statement is supported by the ground truth which lists IMAP and IMAPs as supported protocols."

},

{

"statement": "The supported protocols for fetching emails also include POP3 and the secure variant POP3S.",

"reason": "This statement is supported by the ground truth which lists POP3 and POP3s as supported protocols."

},

{

"statement": "These four protocols are the ones supported for fetching emails.",

"reason": "This statement summarizes the supported protocols mentioned in the answer, which are all present in the ground truth."

}

],

"FP": [],

"FN": []

}

This represents a perfect score of 1.00. Finally, we have to compute the semantic similarity.

Semantic Similarity

We embed the reference and response texts, calculate their cosine similarity, and use the resulting value as the metric. Here we got a score of 0.90.
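The cosine similarity itself is a short computation. The sketch below uses plain Python lists and toy three-dimensional vectors as stand-ins for real embeddings; in practice the vectors come from whatever embedding model the evaluation is configured with.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two embedding vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # convention for this sketch: a zero vector matches nothing
    return dot / (norm_a * norm_b)


# Toy vectors standing in for the embedded reference and response.
reference_vec = [1.0, 0.0, 1.0]
response_vec = [1.0, 0.0, 0.0]
similarity = cosine_similarity(reference_vec, response_vec)
```

Vectors pointing in the same direction score 1, orthogonal vectors score 0, so the value is independent of text length as long as the embeddings capture the same meaning.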

Answer Correctness – aggregation

Finally we combine the two similarity metrics:

- Factual similarity: 1.00

- Semantic similarity: 0.90

The aggregated correctness score is a weighted sum (75 % factual + 25 % semantic): 0.75 × 1.00 + 0.25 × 0.90 ≈ 0.98
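Expressed as code, the aggregation is a plain weighted mean. The function and parameter names are our own; the default weights are the 75 % / 25 % split described above.

```python
def answer_correctness(
    factual: float,
    semantic: float,
    w_factual: float = 0.75,
    w_semantic: float = 0.25,
) -> float:
    """Weighted mean of factual and semantic similarity."""
    total = w_factual + w_semantic
    return (w_factual * factual + w_semantic * semantic) / total


# Values from this walkthrough: factual 1.00, semantic 0.90.
score = answer_correctness(factual=1.00, semantic=0.90)
# score is about 0.975, which this post reports as 0.98 after rounding.
```

Dividing by the weight total keeps the result in the 0 to 1 range even if the weights are later tuned and no longer sum to one.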

The resulting 0.98 reflects a high level of both factual fidelity and semantic relevance, indicating that the generated answer aligns closely with the reference while preserving the intended meaning.

2. Context Recall

For Context Recall, the reference defines what the retrieval step has to deliver: every statement in the reference should be attributable to the retrieved_context. Checking the reference against the retrieved chunks therefore gives a concrete measurement of retrieval quality.

If any item is missing, the Context Recall score drops proportionally, indicating a gap in the index or embedding strategy.

Context Recall – Classification Prompt

Given a context, and an answer, analyze each sentence in the answer and classify if the sentence can be attributed to the given context or not. Use only 'Yes' (1) or 'No' (0) as a binary classification. Output json with reason.

Please return the output in a JSON format that complies with the following schema as specified in JSON Schema:

{"$defs": {"ContextRecallClassification": {"properties": {"statement": {"title": "Statement", "type": "string"}, "reason": {"title": "Reason", "type": "string"}, "attributed": {"title": "Attributed", "type": "integer"}}, "required": ["statement", "reason", "attributed"], "title": "ContextRecallClassification", "type": "object"}}, "properties": {"classifications": {"items": {"$ref": "#/$defs/ContextRecallClassification"}, "title": "Classifications", "type": "array"}}, "required": ["classifications"], "title": "ContextRecallClassifications", "type": "object"}Do not use single quotes in your response but double quotes,properly escaped with a backslash.

--------EXAMPLES-----------

Example 1

Input: {

"question": "What can you tell me about albert Albert Einstein?",

"context": "Albert Einstein (14 March 1879 - 18 April 1955) was a German-born theoretical physicist, widely held to be one of the greatest and most influential scientists of all time. Best known for developing the theory of relativity, he also made important contributions to quantum mechanics, and was thus a central figure in the revolutionary reshaping of the scientific understanding of nature that modern physics accomplished in the first decades of the twentieth century. His mass-energy equivalence formula E = mc2, which arises from relativity theory, has been called 'the world's most famous equation'. He received the 1921 Nobel Prize in Physics 'for his services to theoretical physics, and especially for his discovery of the law of the photoelectric effect', a pivotal step in the development of quantum theory. His work is also known for its influence on the philosophy of science. In a 1999 poll of 130 leading physicists worldwide by the British journal Physics World, Einstein was ranked the greatest physicist of all time. His intellectual achievements and originality have made Einstein synonymous with genius.",

"answer": "Albert Einstein born in 14 March 1879 was German-born theoretical physicist, widely held to be one of the greatest and most influential scientists of all time. He received the 1921 Nobel Prize in Physics for his services to theoretical physics. He published 4 papers in 1905. Einstein moved to Switzerland in 1895"

}

Output: {

"classifications": [

{

"statement": "Albert Einstein, born on 14 March 1879, was a German-born theoretical physicist, widely held to be one of the greatest and most influential scientists of all time.",

"reason": "The date of birth of Einstein is mentioned clearly in the context.",

"attributed": 1

},

{

"statement": "He received the 1921 Nobel Prize in Physics for his services to theoretical physics.",

"reason": "The exact sentence is present in the given context.",

"attributed": 1

},

{

"statement": "He published 4 papers in 1905.",

"reason": "There is no mention about papers he wrote in the given context.",

"attributed": 0

},

{

"statement": "Einstein moved to Switzerland in 1895.",

"reason": "There is no supporting evidence for this in the given context.",

"attributed": 0

}

]

}

-----------------------------

Now perform the same with the following input

input: {

"question": "Which protocols are supported for fetching emails?",

"context": "---\ndescription: Learn about the emailing functionalities in ConSol CM\nslug: Email\n--- # Emailing\nSending and receiving emails is one of the core functionalities of ***ConSol CM***. ...",

"answer": "ConSol CM supports the following protocols for fetching emails: IMAP, IMAPs, POP3, and POP3s.\n"

}

Output:

The evaluation LLM sees one statement covered by the retrieved_context.

Context Recall – Classification Output

[

{

"statement": "ConSol CM supports the following protocols for fetching emails: IMAP, IMAPs, POP3, and POP3s.",

"reason": "The supported protocols for fetching emails are explicitly mentioned in the context.",

"attributed": 1

}

]

The resulting score is 1.00.
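The score follows directly from the classification list: it is the share of reference statements marked as attributed. A minimal sketch, with a function name of our own choosing:

```python
def context_recall(classifications: list[dict]) -> float:
    """Share of reference statements attributed to the retrieved context."""
    if not classifications:
        return 0.0
    attributed = sum(1 for c in classifications if c["attributed"] == 1)
    return attributed / len(classifications)


# The single classification returned for our example.
classifications = [
    {
        "statement": (
            "ConSol CM supports the following protocols for fetching "
            "emails: IMAP, IMAPs, POP3, and POP3s."
        ),
        "reason": (
            "The supported protocols for fetching emails are explicitly "
            "mentioned in the context."
        ),
        "attributed": 1,
    }
]
recall = context_recall(classifications)
```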

3. Answer Relevancy

To assess answer relevancy, we first generate a set of probing questions from the model’s response. Each generated question is embedded, and its cosine similarity with the original user_input is computed; the score is the mean of these similarities. If the response is classified as noncommittal, the score is set to zero, so relevance is only credited when the response actually commits to an answer.
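The combination of mean similarity and the noncommittal check can be sketched as follows. The function name and the example similarities are illustrative assumptions; the similarities would normally come from embedding the generated questions and the user_input.

```python
def answer_relevancy(similarities: list[float], noncommittal: list[int]) -> float:
    """Mean question similarity, zeroed if any verdict is noncommittal."""
    if not similarities:
        return 0.0
    mean_similarity = sum(similarities) / len(similarities)
    committal = 0 if any(noncommittal) else 1
    return mean_similarity * committal


# Two generated questions, both based on a committal response.
committed = answer_relevancy([0.8, 0.6], noncommittal=[0, 0])

# The same similarities, but one verdict flags an evasive response.
evasive = answer_relevancy([0.8, 0.6], noncommittal=[0, 1])
```

Multiplying by the committal flag means an "I don't know" response scores zero even when the generated question closely matches what the user asked.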

Answer Relevancy – Question Generation Prompt

Generate a question for the given answer and Identify if answer is noncommittal. Give noncommittal as 1 if the answer is noncommittal and 0 if the answer is committal. A noncommittal answer is one that is evasive, vague, or ambiguous. For example, "I don't know" or "I'm not sure" are noncommittal answers

Please return the output in a JSON format that complies with the following schema as specified in JSON Schema:

{"properties": {"question": {"title": "Question", "type": "string"}, "noncommittal": {"title": "Noncommittal", "type": "integer"}}, "required": ["question", "noncommittal"], "title": "ResponseRelevanceOutput", "type": "object"}Do not use single quotes in your response but double quotes,properly escaped with a backslash.

--------EXAMPLES-----------

Example 1

Input: {

"response": "Albert Einstein was born in Germany."

}

Output: {

"question": "Where was Albert Einstein born?",

"noncommittal": 0

}

Example 2

Input: {

"response": "I don't know about the groundbreaking feature of the smartphone invented in 2023 as am unaware of information beyond 2022. "

}

Output: {

"question": "What was the groundbreaking feature of the smartphone invented in 2023?",

"noncommittal": 1

}

-----------------------------

Now perform the same with the following input

input: {

"response": "ConSol CM can retrieve mail using the standard mailbox protocols:\n\n- **IMAP** (and the secure variant **IMAPS**) \n- **POP3** (and the secure variant **POP3S**)\n\nThese four protocols are the ones supported for fetching emails 【<link removed>】."

}

Output:

The RAG’s generator LLM clearly states here that those protocols are supported, so the response should be classified as committal.

Answer Relevancy – Question Generation Output

[

{

"question": "What mailbox protocols can ConSol CM use to retrieve mail?",

"noncommittal": 0

}

]

This yields a cosine similarity score of 0.64, a reasonable result given how the two questions differ:

- user_input: Which protocols are supported for fetching emails?

- generated question: What mailbox protocols can ConSol CM use to retrieve mail?

The two questions are closely related but differ in scope: the generated one references ConSol CM explicitly, whereas the original remains generic, which lowers the similarity.

4. Faithfulness

Faithfulness quantifies the factual alignment of a generated answer with the retrieved_context. The response is deemed faithful when every claim it makes can be directly supported by the retrieved data. The metric is computed by extracting all claims from the response, verifying each against the context, and dividing the number of supported claims by the total number of claims.
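As a small sketch of that final ratio, assuming the verdicts arrive in the JSON shape of the NLI output (a `statements` list with a 0/1 `verdict` per entry); the function name and the sample data are our own:

```python
def faithfulness(nli_output: dict) -> float:
    """Share of response statements that the context supports."""
    statements = nli_output.get("statements", [])
    if not statements:
        return 0.0
    supported = sum(1 for s in statements if s["verdict"] == 1)
    return supported / len(statements)


# Illustrative verdicts: three supported claims and one unsupported claim.
sample = {
    "statements": [
        {"statement": "claim A", "reason": "in context", "verdict": 1},
        {"statement": "claim B", "reason": "in context", "verdict": 1},
        {"statement": "claim C", "reason": "not in context", "verdict": 0},
        {"statement": "claim D", "reason": "in context", "verdict": 1},
    ]
}
score = faithfulness(sample)
```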

Faithfulness – Prompt

Your task is to judge the faithfulness of a series of statements based on a given context. For each statement you must return verdict as 1 if the statement can be directly inferred based on the context or 0 if the statement can not be directly inferred based on the context.

Please return the output in a JSON format that complies with the following schema as specified in JSON Schema:

{"$defs": {"StatementFaithfulnessAnswer": {"properties": {"statement": {"description": "the original statement, word-by-word", "title": "Statement", "type": "string"}, "reason": {"description": "the reason of the verdict", "title": "Reason", "type": "string"}, "verdict": {"description": "the verdict(0/1) of the faithfulness.", "title": "Verdict", "type": "integer"}}, "required": ["statement", "reason", "verdict"], "title": "StatementFaithfulnessAnswer", "type": "object"}}, "properties": {"statements": {"items": {"$ref": "#/$defs/StatementFaithfulnessAnswer"}, "title": "Statements", "type": "array"}}, "required": ["statements"], "title": "NLIStatementOutput", "type": "object"}Do not use single quotes in your response but double quotes,properly escaped with a backslash.

--------EXAMPLES-----------

Example 1

Input: {

"context": "John is a student at XYZ University. He is pursuing a degree in Computer Science. He is enrolled in several courses this semester, including Data Structures, Algorithms, and Database Management. John is a diligent student and spends a significant amount of time studying and completing assignments. He often stays late in the library to work on his projects.",

"statements": [

"John is majoring in Biology.",

"John is taking a course on Artificial Intelligence.",

"John is a dedicated student.",

"John has a part-time job."

]

}

Output: {

"statements": [

{

"statement": "John is majoring in Biology.",

"reason": "John's major is explicitly mentioned as Computer Science. There is no information suggesting he is majoring in Biology.",

"verdict": 0

},

{

"statement": "John is taking a course on Artificial Intelligence.",

"reason": "The context mentions the courses John is currently enrolled in, and Artificial Intelligence is not mentioned. Therefore, it cannot be deduced that John is taking a course on AI.",

"verdict": 0

},

{

"statement": "John is a dedicated student.",

"reason": "The context states that he spends a significant amount of time studying and completing assignments. Additionally, it mentions that he often stays late in the library to work on his projects, which implies dedication.",

"verdict": 1

},

{

"statement": "John has a part-time job.",

"reason": "There is no information given in the context about John having a part-time job.",

"verdict": 0

}

]

}

Example 2

Input: {

"context": "Photosynthesis is a process used by plants, algae, and certain bacteria to convert light energy into chemical energy.",

"statements": [

"Albert Einstein was a genius."

]

}

Output: {

"statements": [

{

"statement": "Albert Einstein was a genius.",

"reason": "The context and statement are unrelated",

"verdict": 0

}

]

}

-----------------------------

Now perform the same with the following input

input: {

"context": "---\ndescription: Learn about the emailing functionalities in ConSol CM\nslug: Email\n--- # Emailing\nSending and receiving emails is one of the core functionalities of ***ConSol CM***. ...",

"statements": [

"ConSol CM can retrieve mail using standard mailbox protocols.",

"The supported protocols for fetching emails include IMAP and the secure variant IMAPS.",

"The supported protocols for fetching emails also include POP3 and the secure variant POP3S.",

"These four protocols are the ones supported for fetching emails."

]

}

Output:

All 4 statements are covered by the context.

Faithfulness – Output

{

"statements": [

{

"statement": "ConSol CM can retrieve mail using standard mailbox protocols.",

"reason": "The context explicitly states that ConSol CM interacts with mail servers to fetch emails, indicating it can retrieve mail using standard protocols.",

"verdict": 1

},

{

"statement": "The supported protocols for fetching emails include IMAP and the secure variant IMAPS.",

"reason": "The context mentions that ConSol CM can retrieve emails using IMAP and IMAPS, confirming that these protocols are supported.",

"verdict": 1

},

{

"statement": "The supported protocols for fetching emails also include POP3 and the secure variant POP3S.",

"reason": "The context states that ConSol CM can retrieve emails using POP3 and POP3s, confirming that these protocols are also supported.",

"verdict": 1

},

{

"statement": "These four protocols are the ones supported for fetching emails.",

"reason": "The context lists IMAP, IMAPS, POP3, and POP3s as the supported protocols for fetching emails, confirming that these four are indeed the supported protocols.",

"verdict": 1

}

]

}

This yields another top score of 1.00.

Overall Score Summary

We calculate the overall RAG Evaluation score as a weighted mean that reflects our research‑driven priorities:

- Answer correctness – 50 % weight

- Context recall – 25 % weight

- Answer relevancy – 12.5 % weight

- Faithfulness – 12.5 % weight

This weighting acknowledges that poor answer relevancy or faithfulness is less damaging than an incorrect answer. While context recall is a solid indicator of a functional retrieval component in a RAG pipeline, we treat it as half as important as answer correctness in the final calculation.

Applying the formula to the metrics (answer_correctness = 0.98, context_recall = 1.0, answer_relevancy = 0.64, faithfulness = 1.0) yields an overall score of 0.94. Our manual review confirms that this value is well‑deserved.
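The weighted mean can be written down in a few lines. The dictionary and function names below are our own; the weights and the four input values are the ones listed above.

```python
WEIGHTS = {
    "answer_correctness": 0.5,
    "context_recall": 0.25,
    "answer_relevancy": 0.125,
    "faithfulness": 0.125,
}


def overall_score(scores: dict[str, float]) -> float:
    """Weighted mean of the four metric scores."""
    total_weight = sum(WEIGHTS.values())
    weighted = sum(WEIGHTS[name] * scores[name] for name in WEIGHTS)
    return weighted / total_weight


healthy = overall_score(
    {
        "answer_correctness": 0.98,
        "context_recall": 1.0,
        "answer_relevancy": 0.64,
        "faithfulness": 1.0,
    }
)
```

With these rounded inputs the result is about 0.945, close to the 0.94 reported above, which was presumably computed from the unrounded metric values.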

Unhealthy RAG Pipeline – indicators

The following examples show how our RAG evaluation metrics expose performance regressions and pinpoint the stage where the RAG pipeline fails. Detecting these failures early keeps each change safe and lets us remediate the offending component before the problem propagates.

Retrieval fails

For example, if the embedding model is changed without re‑embedding the documents, the similarity search will break. Let’s see what happens to the scores then.

response: I’m sorry, but I don’t have that information in the provided documentation.

retrieved_context: like … everything, but no relevant information.

Resulting scores

- Overall score: 0.03

- Answer Correctness: 0.06

- Context Recall: 0

- Answer Relevancy: 0

- Faithfulness: 0

Overall score: 0.03 — a clear red flag. The context_recall is 0, meaning no relevant documents are being retrieved. The answer_correctness hovers at 0.06, indicating only a minimal semantic similarity effect. Both answer_relevancy and faithfulness sit at 0, confirming that the pipeline fails to deliver useful and trustworthy responses, which is correct for the scenario.

Weak Generation LLM

Resources are limited. While RAG does not need top-notch LLM quality, the model should at least respect the instructions and do the task. Smaller LLMs or SLMs tend to talk too much or fail to understand the context. For this example we took a small Llama3.2-3B, and the response is not too bad considering its size, but it is of course worse than that of a higher-quality LLM.

response: The following protocols are supported for fetching emails:

* POP3 or POP3S

* IMAP or IMAPS

These protocols can be used to retrieve emails from one or several mailboxes. One mailbox can have several email addresses.

For more information, please refer to the [Email configuration](<link removed>) documentation.

Resulting scores

- Overall score: 0.66

- Answer Correctness: 0.36

- Context Recall: 1

- Answer Relevancy: 1

- Faithfulness: 1

The metrics show strong retrieval performance (context recall = 1) and high answer relevance. Moreover, the perfect faithfulness score suggests the LLM is not hallucinating. However, an answer correctness of about 36 % leaves clear room for improvement and pulls the overall score down to 0.66, which indicates a regression.

Rephrasing problems and a hallucinating Generation LLM

Given the previous example using Llama3.2-3B as the generation LLM, we now deliberately increase the temperature to provoke hallucinations.

Before embedding the user request and executing the similarity search, we canonicalize the query to eliminate variance and reduce noise. Here the LLM returns the original query but wraps it in additional noise, which will likely affect the similarity search result.

- Rephrase From: Which protocols are supported for fetching emails?

- Rephrase To: The conversation history indicates that a rewritten query is not necessary, so the original query remains the same:

Which protocols are supported for fetching emails?

response: ConSol CM can retrieve emails from real mailboxes only, and it supports the following protocols for fetching emails: IMAP (Internet Message Access Protocol) and IMAPS.

Please see [Configuration](<link removed>) for more information.

(Note: POP3 protocol is not supported, email processing will delete the email after reading).

*To clarify*: Can you provide any additional context or details about which protocol you are trying to use? Are there any specific issues or error messages you’re experiencing while trying to fetch emails using a particular protocol?

retrieved_context: something about the mail setup, but the relevant chunks are missing.

Resulting scores

- Overall score: 0.38

- Answer Correctness: 0.47

- Context Recall: 0

- Answer Relevancy: 0.73

- Faithfulness: 0.43

The run supplied a few mail‑related snippets but omitted any reference to the underlying protocols, resulting in a Context Recall of zero. Despite this missing foundation, the model still achieved a modest Answer Correctness score of 0.47, which indicates that it fabricated details—effectively hallucinating—rather than adhering to the strict “context‑only” guideline. This risk shows that the model can generate plausible answers even when the source material lacks the required information, underscoring the need for tighter contract enforcement in RAG pipelines and for using a higher‑quality LLM.
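The three failure patterns above can be turned into a rough triage helper. This is only a sketch of the reasoning in this section: the function name, the returned labels, and the two thresholds (`low` and `noise`) are hypothetical choices for illustration, not values we use in production.

```python
def diagnose(scores: dict[str, float], low: float = 0.5, noise: float = 0.25) -> str:
    """Map a score pattern to the stage that most likely failed.

    `low` and `noise` are hypothetical thresholds chosen for this sketch.
    """
    if scores["context_recall"] < low:
        # Relevant chunks were not retrieved. If the answer still overlaps
        # with the reference, the generator probably went beyond the context.
        if scores["answer_correctness"] >= noise:
            return "retrieval + hallucination"
        return "retrieval"
    if scores["answer_correctness"] < low:
        # Retrieval worked, but the generator did not use the context well.
        return "generation"
    return "healthy"


retrieval_fails = {"answer_correctness": 0.06, "context_recall": 0.0,
                   "answer_relevancy": 0.0, "faithfulness": 0.0}
weak_generation = {"answer_correctness": 0.36, "context_recall": 1.0,
                   "answer_relevancy": 1.0, "faithfulness": 1.0}
hallucinating = {"answer_correctness": 0.47, "context_recall": 0.0,
                 "answer_relevancy": 0.73, "faithfulness": 0.43}
```

Checking context recall first mirrors the order of the pipeline: a generator cannot be judged fairly if the retrieval step never handed it the relevant chunks.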

How can I get my own RAG?

RAG pipelines don’t require a megamodel from a hyperscaler. A 20B‑parameter LLM already delivers a solid foundation when paired with a robust retrieval layer. The higher the model quality, the closer the response aligns with the intended behavior – but retrieval remains the decisive factor.

Because local RAG is affordable, small and midsize enterprises can retain 100 % data sovereignty. By deploying the pipeline on‑premise or within an EU‑compliant cloud and integrating it with cRAiG, you obtain an OpenAI‑compatible API that respects strict privacy contracts while exposing the full power of your knowledge base.

For teams that want both GPU control and a battle‑tested workload platform, we recommend pairing cRAiG with Red Hat OpenShift. Our consulting services can provision a secure OpenShift cluster, configure vLLM for high‑throughput inference, and hook the stack into your CI/CD pipeline – all under your own data‑guardrails. For insights into the OpenShift vLLM setup, see the blog post about our local GPT-OSS inference on OpenShift.