gRPC is a modern, open-source, high-performance Remote Procedure Call (RPC) framework. Although gRPC is still considered somewhat bleeding-edge, it looks like a good successor to REST: it improves latency and serialization performance, and it offers a more straightforward way of designing APIs.

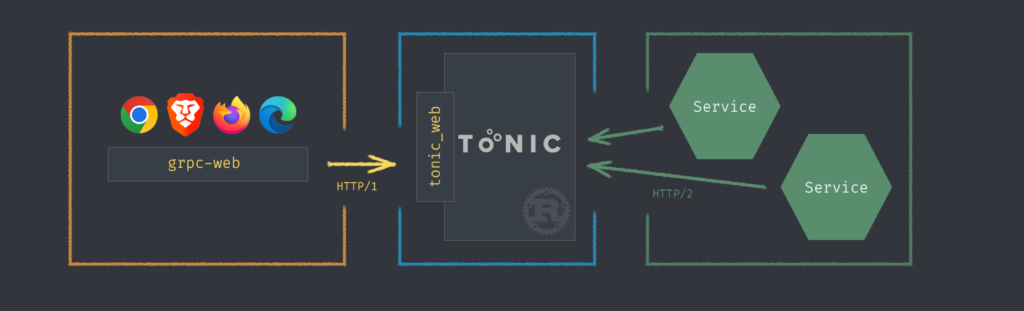

The two main building blocks of the specification are services and messages, both defined with Protocol Buffers, a binary serialization toolset. The resulting .proto files can be used to generate server stubs and clients. Native gRPC runs over HTTP/2 and relies on features that browsers do not expose to JavaScript, so there is also a standard for browsers, gRPC-Web, that works over HTTP/1.1. Servers implement the gRPC services only once and add a bridge component that translates gRPC-Web requests into regular gRPC requests, so that browsers can talk to gRPC servers too. The drawback is that some advantages are lost on that path, but at least we only have to implement each service once, and backend-to-backend communication can still use native gRPC over HTTP/2.
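To give a feeling for what such a bridge has to translate, here is a minimal sketch of the length-prefixed message framing that gRPC uses and that the browser variant (gRPC-Web) reuses. The function names are hypothetical and not part of this project; the sketch only covers framing, not HTTP headers, compression or base64 text mode.

```rust
/// Wraps an already serialized protobuf message in a gRPC frame:
/// 1 flag byte + 4 byte big-endian payload length + payload.
fn frame_message(payload: &[u8]) -> Vec<u8> {
    let mut frame = Vec::with_capacity(5 + payload.len());
    frame.push(0); // flag byte 0 = uncompressed data frame
    frame.extend_from_slice(&(payload.len() as u32).to_be_bytes());
    frame.extend_from_slice(payload);
    frame
}

/// Splits one frame off the front of `buf`.
/// Returns (flag byte, payload, remaining bytes) or None if `buf` is incomplete.
fn split_frame(buf: &[u8]) -> Option<(u8, &[u8], &[u8])> {
    if buf.len() < 5 {
        return None;
    }
    let len = u32::from_be_bytes([buf[1], buf[2], buf[3], buf[4]]) as usize;
    let end = 5usize.checked_add(len)?;
    if buf.len() < end {
        return None;
    }
    Some((buf[0], &buf[5..end], &buf[end..]))
}

/// gRPC-Web sends trailers (such as grpc-status) in the body and marks
/// that frame by setting the most significant bit of the flag byte.
fn is_trailer_frame(flags: u8) -> bool {
    flags & 0x80 != 0
}
```

Because the browser cannot read HTTP/2 trailers, gRPC-Web moves them into such a flagged frame at the end of the response body, which is the main thing a bridge has to convert.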

About the stack

Rust was chosen for the backend and SolidJS was chosen for the browser frontend as both stand for modern technology and performance. The project also proves that a very fast development cycle is possible and full-stack development needn’t be slow.

Rust Backend

Tonic is a Rust implementation of gRPC over HTTP/2. Code generation is handled by prost. We will implement a very simple pet shop with an authentication service and a shop service.

gRPC protobuf definitions

The following two protobuf definitions are the API contract that is used to implement the backend.

proto/auth.v1.proto

syntax = "proto3";

package auth.v1;

service Authentication {

rpc Login (LoginRequest) returns (LoginReply);

rpc Logout (LogoutRequest) returns (LogoutReply);

}

message LoginRequest {

string email = 1;

string password = 2;

}

message LoginReply {

string usertoken = 1;

}

message LogoutRequest {}

message LogoutReply {}

proto/shop.v1.proto

syntax = "proto3";

package shop.v1;

service PetShop {

rpc GetPets (GetPetsRequest) returns (GetPetsReply);

rpc BuyPet (BuyPetRequest) returns (BuyPetReply);

}

message GetPetsRequest {}

message GetPetsReply {

repeated Pet pets = 1;

}

message Pet {

int64 id = 1;

int32 age = 2;

string name = 3;

}

message BuyPetRequest {

int64 id = 1;

}

message BuyPetReply {}

Even if you’ve never read protobuf definitions before, it’s possible to understand what the contract does. Most of it is easy to read and write, but things like repeated for arrays and the = 1, = 2 field numbers take some getting used to.
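The field numbers are what actually ends up on the wire: field names are never transmitted. The following is a minimal hand-written sketch of the protobuf encoding for the Pet and GetPetsReply messages above, only to illustrate what = 1, = 2 and repeated mean. The function names are made up for this illustration; in the project the encoding is done by the code that prost generates.

```rust
/// Encodes an unsigned integer as a protobuf base-128 varint.
fn encode_varint(mut value: u64, out: &mut Vec<u8>) {
    while value >= 0x80 {
        out.push(((value as u8) & 0x7f) | 0x80); // low 7 bits + continuation bit
        value >>= 7;
    }
    out.push(value as u8);
}

const WIRE_VARINT: u64 = 0; // int32, int64, bool, ...
const WIRE_LEN: u64 = 2; // string, bytes, nested messages

/// A field is identified on the wire by (field_number << 3) | wire_type.
fn encode_tag(field_number: u64, wire_type: u64, out: &mut Vec<u8>) {
    encode_varint((field_number << 3) | wire_type, out);
}

/// Hand-encodes `Pet { id = 1, age = 2, name = 3 }`.
/// proto3 omits fields that hold their default value.
fn encode_pet(id: i64, age: i32, name: &str) -> Vec<u8> {
    let mut out = Vec::new();
    if id != 0 {
        encode_tag(1, WIRE_VARINT, &mut out);
        encode_varint(id as u64, &mut out);
    }
    if age != 0 {
        encode_tag(2, WIRE_VARINT, &mut out);
        encode_varint(age as i64 as u64, &mut out); // int32 is sign-extended
    }
    if !name.is_empty() {
        encode_tag(3, WIRE_LEN, &mut out);
        encode_varint(name.len() as u64, &mut out);
        out.extend_from_slice(name.as_bytes());
    }
    out
}

/// `repeated Pet pets = 1` simply repeats field 1 once per element.
fn encode_get_pets_reply(encoded_pets: &[Vec<u8>]) -> Vec<u8> {
    let mut out = Vec::new();
    for pet in encoded_pets {
        encode_tag(1, WIRE_LEN, &mut out);
        encode_varint(pet.len() as u64, &mut out);
        out.extend_from_slice(pet);
    }
    out
}
```

For example, encode_pet(150, 2, "Rex") produces the ten bytes 08 96 01 10 02 1a 03 52 65 78. This is also why field numbers must stay stable once a contract is in use, while field names can be changed freely.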

prost code generation

Cargo runs a build.rs file placed in the package root before it compiles the crate itself. There we generate all the code and structs that are needed for our server implementation.

build.rs

fn main() {

tonic_build::configure()

.compile(&["proto/auth.v1.proto", "proto/shop.v1.proto"], &["proto"])

.expect("compile gRPC proto files.");

}

To include the generated code, we use a macro that embeds the files; everything is then accessible via the grpc module.

src/api.rs

/// contains generated gRPC code

mod grpc {

tonic::include_proto!("auth.v1");

tonic::include_proto!("shop.v1");

}

Stub implementation

For every gRPC service, a Rust trait has to be implemented that contains all rpc calls from the protobuf definition. Every fn is async, which is why async_trait is used. For more details about why a plain async fn ..() {} in a trait is not enough here, read the docs. In short, Rust cannot currently define an object-safe trait whose methods infer their return type from the implementation. The async_trait macro therefore transforms the code into non-async functions that return a boxed future; using the macro is just a convenient way to get rid of that boilerplate.
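To make that transformation more concrete, here is a rough sketch of what such a trait looks like when the boxed future is written by hand. Greeter and English are hypothetical stand-ins, not the generated Authentication or PetShop traits, and the tiny block_on helper only exists so the sketch runs with the standard library alone; the real project uses tokio.

```rust
use std::future::Future;
use std::pin::Pin;
use std::sync::Arc;
use std::task::{Context, Poll, Wake, Waker};

/// Roughly the shape `#[async_trait]` produces for `async fn greet(&self, name: &str) -> String`:
/// a normal fn that returns a boxed, pinned future, which keeps the trait usable as `dyn Greeter`.
trait Greeter {
    fn greet<'a>(&'a self, name: &'a str) -> Pin<Box<dyn Future<Output = String> + Send + 'a>>;
}

struct English;

impl Greeter for English {
    fn greet<'a>(&'a self, name: &'a str) -> Pin<Box<dyn Future<Output = String> + Send + 'a>> {
        // The async block is the body we would have written in an `async fn`.
        Box::pin(async move { format!("Hello, {name}!") })
    }
}

/// Waker that does nothing; enough for futures that never actually wait.
struct NoopWake;

impl Wake for NoopWake {
    fn wake(self: Arc<Self>) {}
}

/// Minimal executor for this sketch: polls in a loop until the future is ready.
fn block_on<F: Future>(fut: F) -> F::Output {
    let mut fut = Box::pin(fut);
    let waker = Waker::from(Arc::new(NoopWake));
    let mut cx = Context::from_waker(&waker);
    loop {
        if let Poll::Ready(value) = fut.as_mut().poll(&mut cx) {
            return value;
        }
        std::hint::spin_loop();
    }
}

/// Calls the method through a trait object, which is what object safety buys us.
fn greet_via_trait_object(name: &str) -> String {
    let service: Box<dyn Greeter> = Box::new(English);
    block_on(service.greet(name))
}
```

The price of this approach is one heap allocation per call for the boxed future, which is usually negligible next to the network round trip of an rpc call.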

src/api.rs

#[derive(Clone)]

pub struct AuthenticationService;

#[async_trait]

impl Authentication for AuthenticationService {

async fn login(&self, request: Request<LoginRequest>) -> Result<Response<LoginReply>> {

let request = request.into_inner();

if request.email == "user@email.com" && request.password == "password" {

Ok(Response::new(LoginReply {

usertoken: TOKEN.to_owned(),

}))

} else {

Err(Status::unauthenticated("invalid credentials"))

}

}

async fn logout(&self, request: Request<LogoutRequest>) -> Result<Response<LogoutReply>> {

check_auth_meta(&request)?;

Ok(Response::new(LogoutReply {}))

}

}

#[derive(Clone)]

pub struct ShopService {

db: PetDb,

}

#[async_trait]

impl PetShop for ShopService {

async fn get_pets(&self, request: Request<GetPetsRequest>) -> Result<Response<GetPetsReply>> {

check_auth_meta(&request)?;

let data = self.db.data.lock().await;

Ok(Response::new(GetPetsReply {

pets: data

.iter()

.map(|pet| Pet {

id: pet.id,

age: pet.age,

name: pet.name.clone(),

})

.collect(),

}))

}

async fn buy_pet(&self, request: Request<BuyPetRequest>) -> Result<Response<BuyPetReply>> {

check_auth_meta(&request)?;

let mut data = self.db.data.lock().await;

data.retain(|pet| pet.id != request.get_ref().id);

Ok(Response::new(BuyPetReply {}))

}

}

Notice that the implementation takes &self, not &mut self. That means mutable data, such as our “database” (a Vec<Pet>), has to be synchronized with a lock. The setup creates every service twice, once per server. Each PetShop service owns a PetDb, which holds a shared reference to the data behind a mutex.

src/main.rs

#[derive(Clone)]

pub struct PetDb {

data: Arc<Mutex<Vec<Pet>>>,

}

#[derive(Clone)]

struct Pet {

id: i64,

age: i32,

name: String,

}
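The sharing between the two service instances can be sketched with the standard library alone. Note the assumptions: this sketch uses std::sync::Mutex as a stand-in for the async mutex that the project locks with .lock().await, and the contents of create_pet_db as well as the helper methods new and pet_count are invented for the illustration.

```rust
use std::sync::{Arc, Mutex};

#[derive(Clone, Debug, PartialEq)]
struct Pet {
    id: i64,
    age: i32,
    name: String,
}

/// Cloning a PetDb clones the Arc, not the Vec: all clones see the same data.
#[derive(Clone)]
struct PetDb {
    data: Arc<Mutex<Vec<Pet>>>,
}

#[derive(Clone)]
struct ShopService {
    db: PetDb,
}

impl ShopService {
    fn new(db: PetDb) -> Self {
        Self { db }
    }

    /// Takes `&self` like the gRPC trait methods; the lock provides the mutability.
    fn buy_pet(&self, id: i64) {
        let mut data = self.db.data.lock().expect("pet db mutex poisoned");
        data.retain(|pet| pet.id != id);
    }

    fn pet_count(&self) -> usize {
        self.db.data.lock().expect("pet db mutex poisoned").len()
    }
}

/// Hypothetical seed data; the real project defines its own pets.
fn create_pet_db() -> PetDb {
    let pets = vec![
        Pet { id: 1, age: 3, name: "Rex".to_owned() },
        Pet { id: 2, age: 5, name: "Tom".to_owned() },
    ];
    PetDb { data: Arc::new(Mutex::new(pets)) }
}
```

If one service instance is created with db.clone() and the other with db, a pet bought through the first instance is gone for the second one as well, because both hold the same Arc.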

Accessing the data mutably is therefore done with self.db.data.lock().await. check_auth_meta is a guard that checks whether the request metadata contains the correct token.

/// checks whether request has correct auth meta set

fn check_auth_meta<T>(request: &Request<T>) -> Result<()> {

let meta = request.metadata();

if let Some(authentication) = meta.get(AUTH_META) {

if authentication == format!("Bearer {TOKEN}").as_str() {

Ok(())

} else {

Err(Status::unauthenticated("bad authorization token"))

}

} else {

Err(Status::unauthenticated("no authentication metadata given"))

}

}
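The three possible outcomes of the guard are easier to see in isolation. The following sketch mirrors the logic with a plain HashMap instead of the tonic request metadata; the values of AUTH_META and TOKEN and the AuthError type are assumptions made for this illustration only.

```rust
use std::collections::HashMap;

// Assumed values: the real constants live in the project's source.
const AUTH_META: &str = "authentication";
const TOKEN: &str = "secret-token";

#[derive(Debug, PartialEq)]
enum AuthError {
    /// No metadata entry at all.
    Missing,
    /// Entry present, but not `Bearer <TOKEN>`.
    BadToken,
}

/// Same decision logic as the guard above, on a plain map.
fn check_auth_meta(metadata: &HashMap<String, String>) -> Result<(), AuthError> {
    match metadata.get(AUTH_META) {
        Some(value) if value.strip_prefix("Bearer ") == Some(TOKEN) => Ok(()),
        Some(_) => Err(AuthError::BadToken),
        None => Err(AuthError::Missing),
    }
}

/// Builds a metadata map with an optional authentication entry.
fn metadata_with(value: Option<&str>) -> HashMap<String, String> {
    let mut metadata = HashMap::new();
    if let Some(value) = value {
        metadata.insert(AUTH_META.to_owned(), value.to_owned());
    }
    metadata
}
```

Both error cases map to the same unauthenticated status in the server; they only differ in the message, which helps when debugging a client that forgets to send the metadata.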

Entry point

Finally we need to start the servers by creating a tokio async runtime.

src/main.rs

#[tokio::main]

async fn main() -> Result<()> {

run().await

}

We also need to configure both servers with the services. Take care of CORS for the HTTP/1 endpoints: without it, a browser client is not allowed to access them, because in this sample project the client origin is localhost:3000 while the server is localhost:8081.

src/main.rs

/// macro free entry-point running the server

async fn run() -> Result<()> {

let db = create_pet_db();

// gRPC server on `:8080`

let grpc_server = Server::builder()

.add_service(api::auth())

.add_service(api::shop(db.clone()))

.serve(

"127.0.0.1:8080"

.parse()

.expect("valid address can be parsed"),

);

// http-gRPC bridge server on `:8081`.

// Browsers cannot use native gRPC because they do not expose the required HTTP/2 features.

let web_server = Server::builder()

.accept_http1(true)

// because client and server don't have the same origin (different port):

// 1. server has to allow origins, headers and methods explicitly

// 2. server has to allow the client to read specific gRPC response headers.

.layer(

CorsLayer::new()

.allow_origin(Any)

.allow_headers(Any)

.allow_methods(Any)

.expose_headers([

HeaderName::from_static("grpc-status"),

HeaderName::from_static("grpc-message"),

]),

)

.layer(GrpcWebLayer::new())

.add_service(api::auth())

.add_service(api::shop(db))

.serve(

"127.0.0.1:8081"

.parse()

.expect("valid address can be parsed"),

);

// run both servers

try_join!(grpc_server, web_server)?;

Ok(())

}

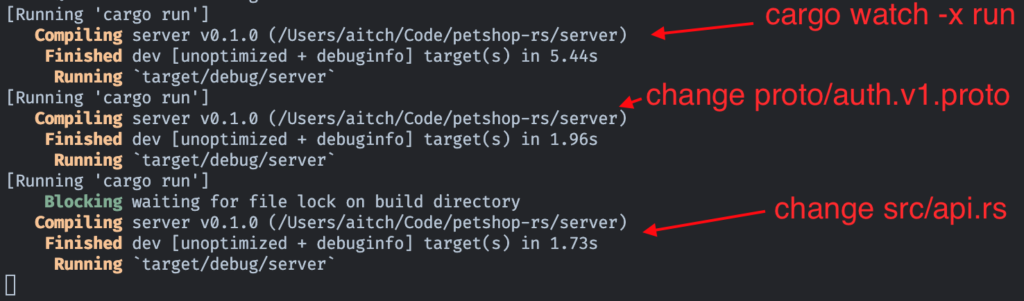

Running the server

To reload the server on every code change, we use cargo-watch via cargo watch -x run, which recompiles and restarts the server within a few seconds. This happens for code changes as well as for .proto file changes.

SolidJS frontend

As one of the big three, React is still a good choice for frontends. On the one hand, React advertises predictable results; on the other hand, performance depends heavily on “smart micro caching” of components and on not breaking the “dependency chain”. It is almost irresponsible not to use a linter that checks the dependency lists of hooks, because it is very easy to make mistakes.

All in all, SolidJS is a reactive React plus a few convenient building blocks we all missed in React. It uses JSX/TSX as its representation, but without the indirection of a virtual DOM. All components render once and then change via reactive updates, which makes it very fast, as only a few parts of the DOM need to be updated.

protoc code generation

protoc is the protobuf compiler that parses .proto files. It can be used with plugins that generate code, such as protoc-gen-ts_proto. That’s how we generate TypeScript files with the client implementations and message interface definitions.

scripts/protogen.sh

protoc \
  --plugin=./node_modules/.bin/protoc-gen-ts_proto \
  --ts_proto_out=./src/generated/proto \
  --ts_proto_opt=esModuleInterop=true \
  --ts_proto_opt=outputClientImpl=grpc-web \
  -I ../server/proto \
  ../server/proto/auth.v1.proto \
  ../server/proto/shop.v1.proto

We don’t need to implement anything for the client, as each generated file already contains a client implementation.

src/generated/proto/shop.v1.ts

export interface PetShop {

GetPets(request: DeepPartial<GetPetsRequest>, metadata?: grpc.Metadata): Promise<GetPetsReply>;

BuyPet(request: DeepPartial<BuyPetRequest>, metadata?: grpc.Metadata): Promise<BuyPetReply>;

}

export class PetShopClientImpl implements PetShop {

API wrapper

In order to hide gRPC details and be able to do central logging, we wrap each service. The auth.v1 API uses an optional usertoken.

src/api.ts

export class AuthV1Api {

private rpc: GrpcWebImpl

private client: AuthenticationClientImpl

constructor(usertoken?: string) {

let metadata: grpc.Metadata | undefined = undefined

if (usertoken !== undefined) {

metadata = new grpc.Metadata()

metadata.append('authentication', `Bearer ${usertoken}`)

}

this.rpc = new GrpcWebImpl(HOST, { metadata })

this.client = new AuthenticationClientImpl(this.rpc)

}

async login(email: string, password: string) {

const { usertoken } = await this.client.Login({ email, password })

return { usertoken }

}

async logout() {

await this.client.Logout({})

}

}

The shop.v1 API needs a mandatory usertoken and it’s not possible to use the API without it.

src/api.ts

export class ShopV1Api {

private rpc: GrpcWebImpl

private client: PetShopClientImpl

constructor(usertoken: string) {

const metadata = new grpc.Metadata()

metadata.append('authentication', `Bearer ${usertoken}`)

this.rpc = new GrpcWebImpl(HOST, { metadata })

this.client = new PetShopClientImpl(this.rpc)

}

async getPets(): Promise<Pet[]> {

const { pets } = await this.client.GetPets({})

return pets

}

async buyPet(id: number) {

await this.client.BuyPet({ id })

}

}

Using the client

With SolidJS we can nicely use the concept of “resources”.

src/PetList.tsx

export default function PetList() {

const [pets, { refetch }] = createResource<Pet[] | undefined>(

async (k, info) => {

return await shopApi()?.getPets()

}

)

function buyPet(id: number) {

shopApi()

?.buyPet(id)

.then(() => {

refetch()

})

.catch((e) => {

alert('buy pet failed')

})

}

return (

<div>

<h3>PetList</h3>

<Show when={pets()} fallback={<p>fetching pets</p>} keyed>

{(p) => (

<For each={p} fallback={<p>all pets are sold</p>}>

{(item) => {

return (

<article>

<h4>{item.name}</h4>

<p>{item.age}yrs</p>

<button onClick={() => buyPet(item.id)}>Buy</button>

</article>

)

}}

</For>

)}

</Show>

</div>

)

}

Running the client

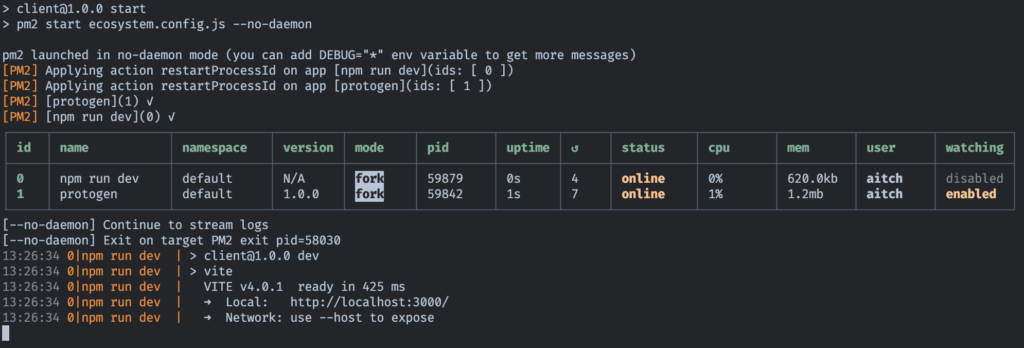

We have the client, which should be hot-reloaded, and the .proto files, which should trigger protoc to generate new clients. SolidJS uses vite, which already supports hot-reloading. For the other part we need a bash script to be called on changes. A combination of nodemon and forever would allow this, but forever is more or less in maintenance mode and pm2 should be preferred; it also supports file watching and triggering scripts.

Therefore npm start triggers pm2 start ecosystem.config.js --no-daemon, which runs the given configuration without a daemon. pm2 is typically used as a production-ready process manager for Node.js that manages Node processes via a CLI. We only want it to start npm run dev and run the code generation script, so we use pm2 in isolation, without a daemon.

ecosystem.config.js

module.exports = {

apps: [

{

script: 'npm run dev',

autorestart: false,

shutdown_with_message: true,

},

{

script: './scripts/protogen.sh',

watch: '../server/proto',

},

],

}

Starting with npm start runs both scripts and merges their logs into your terminal.

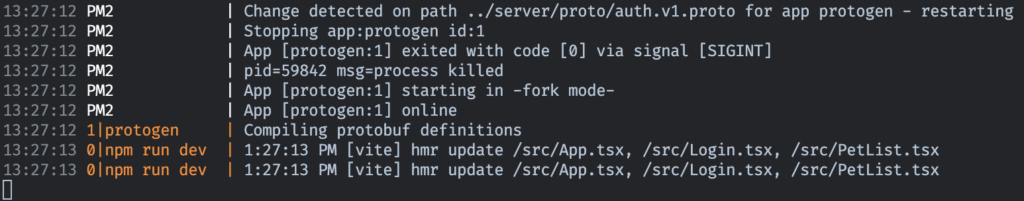

npm start

When we change a .proto file, the clients are regenerated immediately and the code is rebuilt. When we change any source file, the code is rebuilt as well.

Project in numbers

- development cycle builds take ~3s for the Rust compile, while the frontend is finished after ~1s

- the code to add per rpc service call in the Rust backend is just a trait fn implementation with a few lines of boilerplate

- Rust backend binary size is 4MB and memory usage is ~3MB after warmup

- SolidJS frontend + gRPC client packaged code is ~90kB

Conclusion

- a contract-first approach with gRPC speeds up full-stack development, and high-performance communication is a huge advantage, especially in micro-service environments

- Rust plays well with static metaprogramming such as code generation and compile-time optimizations for the gRPC server implementation, which has a positive impact on binary size and memory usage

- SolidJS has everything it needs, and more, to gain a high reputation in the community

Thanks for reading. As always feedback is welcome. Find the repository on GitHub.