Introduction

Webservice technologies have come a long way since their inception, and the demand for high-performance and scalable webservices has only increased over time. In recent years, there has been a growing trend towards natively compiled webservice technologies, which offer significant performance improvements over their JVM-based counterparts. In this blog post, we explore three popular natively compiled webservice technologies: Quarkus-Native, Spring-Native, and Rust (Actix-Web). We compare them with each other, and the two Java frameworks additionally with their JVM-based variants, on key performance indicators such as startup time, response times, and memory footprint.

Quarkus-Native: Quarkus is an open-source, Kubernetes-native Java framework designed to improve developer productivity and minimize the footprint of Java applications in production. Quarkus-Native refers to Quarkus's native mode, which uses GraalVM to compile an application ahead of time into a native binary. This can provide significant performance improvements over a traditional application running on the Java Virtual Machine (JVM).

Spring-Native: Spring-Native is a project that aims to bring native compilation support to the popular Spring framework. Like Quarkus-Native, Spring-Native uses GraalVM to compile Spring applications into a native binary, allowing them to start up faster and use fewer resources than a traditional JVM-based application. Its key benefit is that developers can keep working with Spring, a well-established and widely used framework for building Java applications, while gaining the performance benefits of native compilation. In our case, we used Spring-Native with the Spring-Boot development platform.

Rust: Rust is a statically typed, multi-paradigm programming language designed for safety, concurrency, and performance, which makes it attractive for webservice development. Its ownership model, checked by the compiler, rules out dangling pointers and data races in safe code, and because safe Rust has no null references (optional values are expressed with the Option type), null reference errors cannot occur either. This makes Rust a strong choice for building high-performance, concurrent applications that need to be reliable and scalable. For Rust, we used Actix-Web to provide the REST endpoints.
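To illustrate the null-safety point, here is a minimal sketch using only the standard library. The Account type and the find_account and balance_or_zero functions are hypothetical and not taken from our services. A lookup returns an Option holding a borrowed reference instead of a possibly-null pointer, and the compiler forces the caller to handle the missing case.

```rust
use std::collections::HashMap;

// Hypothetical account type for illustration only.
struct Account {
    balance_cents: i64,
}

// Returns a borrowed reference wrapped in Option instead of a possibly-null pointer.
// The returned reference borrows from `accounts`, so the compiler rejects any use of
// it after the map has been dropped or while the map is being modified.
fn find_account(accounts: &HashMap<u64, Account>, id: u64) -> Option<&Account> {
    accounts.get(&id)
}

// The caller must handle the None case explicitly before it can read a balance.
fn balance_or_zero(accounts: &HashMap<u64, Account>, id: u64) -> i64 {
    match find_account(accounts, id) {
        Some(account) => account.balance_cents,
        None => 0,
    }
}

fn main() {
    let mut accounts = HashMap::new();
    accounts.insert(1, Account { balance_cents: 10_000 });

    println!("{}", balance_or_zero(&accounts, 1)); // prints 10000
    println!("{}", balance_or_zero(&accounts, 2)); // prints 0
}
```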

Description of the Application Use Case

For each technology, we implemented a simple wallet application that provides REST endpoints for creating accounts and sending money from one account to another (transactions); these endpoints generate the workload for the load tests. The following entities are involved:

We use a Postgres database running in Docker, and every REST request is authenticated by validating a JWT signed with HS256 (HMAC with SHA-256), which adds computational work to each request.
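Because HS256 is a signature scheme (HMAC with SHA-256, as specified for JSON Web Signatures in RFC 7515 and RFC 7518) rather than encryption, validating a token means recomputing the HMAC over its first two dot-separated segments with the shared secret K and comparing the result with the third segment, ideally in constant time. Here H is SHA-256, K' is the key padded to SHA-256's 64-byte block size (or hashed first if it is longer), and ipad and opad are the bytes 0x36 and 0x5c repeated to block length, following the HMAC definition in RFC 2104. A complete validation typically also checks claims such as the expiry time (exp).

```latex
\begin{aligned}
m &= \mathrm{BASE64URL}(\text{header}) \,\|\, \texttt{.} \,\|\, \mathrm{BASE64URL}(\text{payload}) \\
\mathrm{HMAC}_{H}(K, m) &= H\!\left((K' \oplus \mathrm{opad}) \,\|\, H\!\left((K' \oplus \mathrm{ipad}) \,\|\, m\right)\right) \\
\text{token valid} &\iff \mathrm{BASE64URL}\!\left(\mathrm{HMAC}_{H}(K, m)\right) = \text{signature segment}
\end{aligned}
```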

Test-Setup with Gatling

Gatling is an open-source load testing framework for web applications. It is designed to be easy to use and to deliver high performance, and it can simulate thousands of concurrent users making requests to a server. One of its key features is that the workload simulated during a load test can be specified to best fit the scenario and purpose of the test.

Gatling also provides reporting and visualization tools that help users understand the results of their load tests. Its detailed reports cover the performance of each request, including response times, error rates, and other metrics, and come with built-in graphs and charts such as response time distributions and request rate over time. We used these reports to extract the performance figures presented in our results.

We decided to use the workload profile constantConcurrentUsers, an injection profile of Gatling's closed workload model that keeps a fixed number of concurrent users active at any given point in time during the load test. We chose this profile because we wanted to measure performance and stability under a constant (heavy) load over an extended period of time, so that the runs can be compared with each other. The following sequence illustrates the test execution with Gatling:

We used two stress levels, defined below, to compare the performance under low and high load:

| Level | Concurrent requests maintained by Gatling |
|-------|-------------------------------------------|
| LOW   | 2                                         |
| HIGH  | 250                                       |
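To make the closed workload model behind constantConcurrentUsers concrete, here is a minimal Rust sketch using only the standard library. It is not Gatling's implementation: each hypothetical virtual user runs on its own thread and sends its next request only after the previous one has completed, so the number of in-flight requests never exceeds the configured user count. The function simulated_request is a hypothetical stand-in that just sleeps instead of calling a real service.

```rust
use std::sync::atomic::{AtomicUsize, Ordering};
use std::sync::Arc;
use std::thread;
use std::time::{Duration, Instant};

/// Result of one simulated closed-model run.
struct RunStats {
    completed: usize,
    max_in_flight: usize,
}

/// Hypothetical stand-in for one HTTP request; a real test would call the service.
fn simulated_request(latency: Duration) {
    thread::sleep(latency);
}

/// Keeps exactly `users` virtual users busy until `duration` has elapsed.
/// Each user sends its next request only after the previous one has completed,
/// so the number of in-flight requests never exceeds `users` (closed model).
fn run_constant_concurrent_users(users: usize, duration: Duration, latency: Duration) -> RunStats {
    let completed = Arc::new(AtomicUsize::new(0));
    let in_flight = Arc::new(AtomicUsize::new(0));
    let max_in_flight = Arc::new(AtomicUsize::new(0));
    let deadline = Instant::now() + duration;

    let handles: Vec<_> = (0..users)
        .map(|_| {
            let completed = Arc::clone(&completed);
            let in_flight = Arc::clone(&in_flight);
            let max_in_flight = Arc::clone(&max_in_flight);
            thread::spawn(move || {
                while Instant::now() < deadline {
                    // Record how many requests are outstanding right now.
                    let now = in_flight.fetch_add(1, Ordering::SeqCst) + 1;
                    max_in_flight.fetch_max(now, Ordering::SeqCst);
                    simulated_request(latency);
                    in_flight.fetch_sub(1, Ordering::SeqCst);
                    completed.fetch_add(1, Ordering::SeqCst);
                }
            })
        })
        .collect();

    for handle in handles {
        handle.join().expect("virtual user thread panicked");
    }

    RunStats {
        completed: completed.load(Ordering::SeqCst),
        max_in_flight: max_in_flight.load(Ordering::SeqCst),
    }
}

fn main() {
    // Two virtual users, as in the LOW stress level, run for 200 ms with a simulated
    // latency of 10 ms per request (demo values, not the study's actual setup).
    let stats = run_constant_concurrent_users(2, Duration::from_millis(200), Duration::from_millis(10));
    println!(
        "completed: {}, max in flight: {}",
        stats.completed, stats.max_in_flight
    );
}
```

In this closed model, a slower service automatically receives fewer requests per unit of time, so the number of requests completed within a fixed window reflects the service's response times.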

Results

Metrics

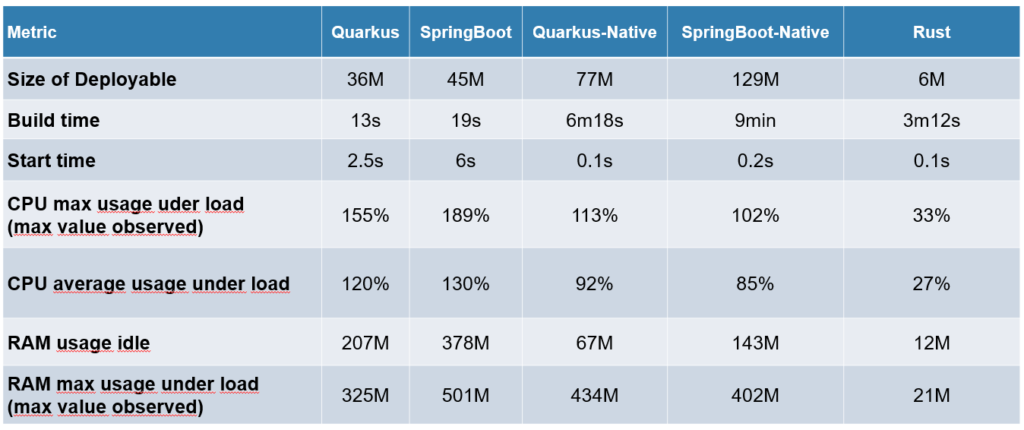

The table below gives an overview of metrics taken from the application system during high load. The values for maximum CPU usage and RAM usage under load were manually observed using htop. The average CPU usage under load was measured using the GNU version of the Unix time tool.
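GNU time reports the average CPU usage of a process (its %P format field) as user plus system CPU time divided by the elapsed wall-clock time. The following Rust sketch reproduces that calculation; the input values in main are hypothetical and not measurements from this study.

```rust
/// Average CPU utilisation in percent, computed the way GNU time's `%P` field is
/// defined: (user time + system time) / elapsed wall-clock time.
/// Values above 100 mean that more than one core was busy on average.
fn average_cpu_percent(user_secs: f64, system_secs: f64, elapsed_secs: f64) -> f64 {
    assert!(elapsed_secs > 0.0, "elapsed time must be positive");
    (user_secs + system_secs) / elapsed_secs * 100.0
}

fn main() {
    // Hypothetical readings, not measurements from the study:
    // 90 s user + 30 s system CPU time over a 60 s run.
    let percent = average_cpu_percent(90.0, 30.0, 60.0);
    println!("{percent:.0}%"); // prints 200%
}
```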

What is noticeable when looking at the size of the deployable is that, for both Quarkus and Spring, the GraalVM native images are considerably larger than the jar versions. This is because the runtime services normally provided by the JVM, such as garbage collection, as well as the ahead-of-time-compiled code of all reachable classes, including libraries, have to be bundled inside the native binary. The Rust variant needs none of this and is by far the smallest, at only 5.8 mebibytes (MiB).

Not surprisingly, the jar variants build much faster than their native counterparts, since they only need to compile Java source code to bytecode and do not need to produce a native x86 binary. Note that the Rust build only takes this long with Cargo's release profile. During development, the debug profile reduces the build time to a couple of seconds, because Cargo does not optimize the binary in this profile and can build incrementally, reusing parts of the artifact from the previous build. For the GraalVM native images, however, there does not seem to be a comparable way to shorten the build time. This can be an impediment to the development process, especially with Spring Boot.

As expected, the web services start significantly faster as native binaries, because no JVM has to be started first to load classes and to interpret or JIT-compile bytecode.

The native variants use less CPU, both at maximum and on average. Quarkus-Native uses 28% less CPU at its maximum than the JVM version, and Spring saves 46% when switching to the native image. The differences in average CPU consumption under load are less pronounced: Quarkus-Native uses 23% and Spring-Native 35% less average CPU time than their JVM-based counterparts.
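Expressed as a formula, a saving s means that the native variant needs (1 − s) times the CPU of its JVM counterpart, where C denotes CPU usage (maximum or average):

```latex
s = 1 - \frac{C_{\text{native}}}{C_{\text{JVM}}}
\quad\Longleftrightarrow\quad
C_{\text{native}} = (1 - s)\, C_{\text{JVM}}
```

With the savings reported above, Quarkus-Native peaks at 0.72 times and Spring-Native at 0.54 times the maximum CPU of the respective JVM version; on average, they use 0.77 and 0.65 times the CPU time.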

Also striking are the differences between the native variants: Rust uses less than a third of the CPU power of both Quarkus-Native and Spring-Native. This is the case for both maximum CPU and average CPU.

The differences in RAM usage are interesting as well. As expected, the native versions far outperform the JVM variants when the application is idle, and Rust leads the pack with only 12 MB. However, this comparison may flatter Quarkus-Native and Spring-Boot-Native more than it should, because the idle RAM usage of the JVM variants also includes the heap that the JVM has already allocated. The RAM usage under load supports this: the maximum values observed for Quarkus and Spring-Boot are similar in both native and JVM mode, and Quarkus in JVM mode even has the lowest value among the Java variants, at 325 MB. This matches our expectation: all four Java versions rely on garbage-collected Java heap memory management, so their memory consumption under load should behave similarly. Rust again performs better, with a maximum RAM usage under load of only 21 MB. This surprised us, since we expected the maximum RAM usage under load to be dictated foremost by the use-case logic, which is the same in all implementations; this does not seem to be the case here.

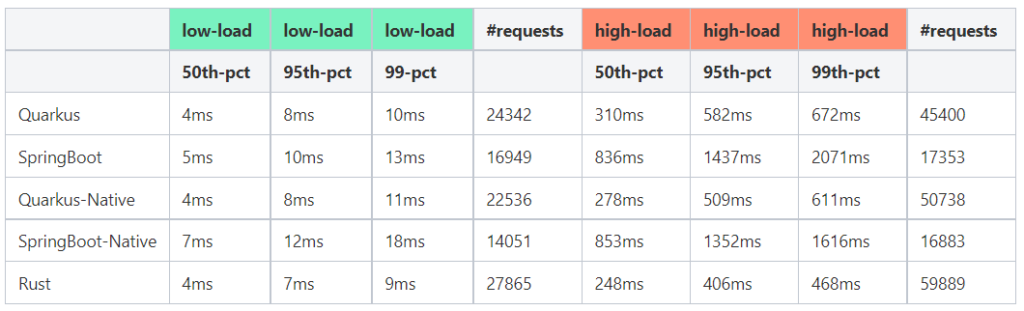

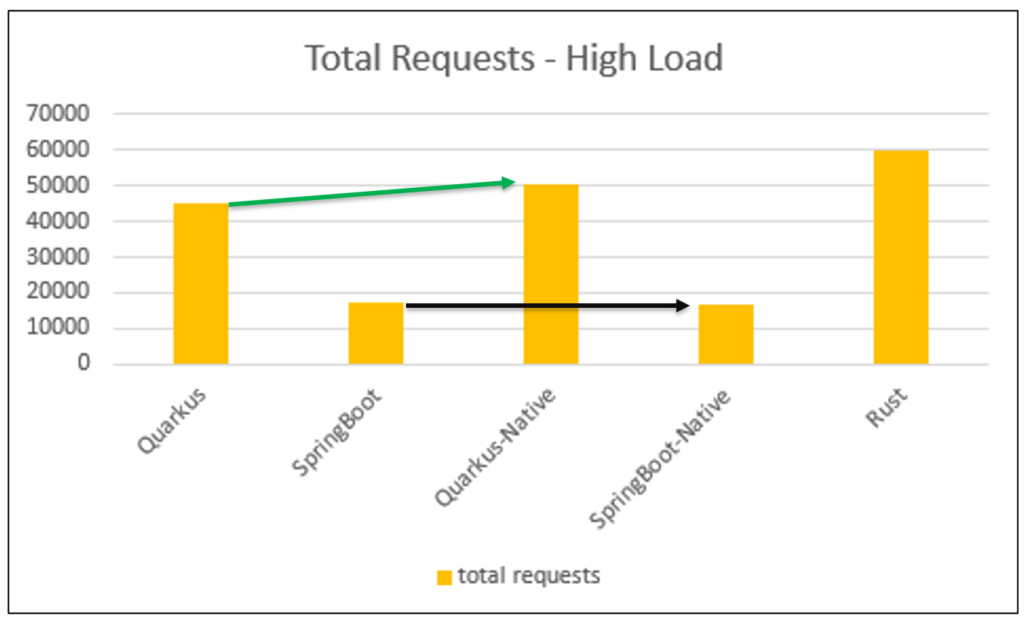

Response Times

Most importantly, we discuss the response times and throughput of our applications. We ran every version in two test scenarios, one with low and one with high load: the high-load test maintained 250 concurrent requests at any given time, the low-load test two. In the high-load test, Actix-Web came first with 59,889 processed requests in one minute, 18% more than Quarkus-Native in second place with 50,738 requests. Third place goes to Quarkus in JVM mode with 45,400 requests. Spring-Boot-JVM and Spring-Boot-Native processed 17,353 and 16,883 requests, respectively, which is only 38% and 37% of the throughput of Quarkus in JVM mode.
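The percentages above can be recomputed from the reported request counts with a short Rust program. The numbers are taken from the text; the 38% and 37% figures refer to Quarkus in JVM mode (relative to Quarkus-Native they would be roughly 34% and 33%).

```rust
/// Requests completed in the one-minute high-load test, as reported in the text.
const RESULTS: [(&str, u32); 5] = [
    ("Actix-Web", 59_889),
    ("Quarkus-Native", 50_738),
    ("Quarkus-JVM", 45_400),
    ("Spring-Boot-JVM", 17_353),
    ("Spring-Boot-Native", 16_883),
];

/// Throughput of `a` relative to `b`, in percent.
fn percent_of(a: u32, b: u32) -> f64 {
    f64::from(a) / f64::from(b) * 100.0
}

fn main() {
    let quarkus_jvm = RESULTS[2].1;
    for (name, requests) in RESULTS {
        let per_second = f64::from(requests) / 60.0;
        println!(
            "{name:<20} {requests:>6} req/min  {per_second:>7.1} req/s  {:>5.1}% of Quarkus-JVM",
            percent_of(requests, quarkus_jvm)
        );
    }
    // Actix-Web relative to Quarkus-Native: prints 118.0, i.e. about 18% more requests.
    println!("{:.1}", percent_of(RESULTS[0].1, RESULTS[1].1));
}
```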

Conclusion

In conclusion, here are a few observations on our particular project. They do not settle the discussions surrounding the involved technologies and their proper use. To gain more definitive insights on these topics, we recommend further research using more complex use cases.

Setting Rust aside for a moment, Quarkus performed much better than Spring-Boot in terms of throughput. Rust, in turn, processed about 18% more requests than Quarkus-Native in the high-load test.

When developing an application with Quarkus-Native or Spring-Boot-Native, it is important to build the app in native mode regularly. Otherwise, you can end up in situations where the app builds in JVM mode but fails in native mode. Tracking down the cause by incrementally rebuilding the app is then difficult, since every native build takes at least a couple of minutes. Rebuilding in native mode continuously alongside development surfaces such build errors early on.

We mostly used the default configurations of the technologies involved in our tests, so there may be some room for improvement. We still consider this a fair comparison, since good default configurations are an important quality of big web frameworks, and they are also advertised as such.

Related to this, native compilation for Java is still very young, so we may see improvements in performance and usability in the future.

It is also important to bear in mind that we don't know exactly how much our observations depend on the specifics of our use case. For example, the Quarkus project homepage advertises a boot time of 0.04 s for simple CRUD apps in native mode, which is much faster than what we observed in our tests.

Among other things, we learned from this R&D project that Rust offers a mature, production-ready environment for developing web application backends. The libraries we used with Rust do not offer a highly integrated framework like Quarkus or Spring-Boot, but configuration and setup were not actually easier with the latter technologies. For maintenance over time, however, a framework might prove beneficial. Finally, it remains unclear how much effort it takes for a Java programmer to become fully accustomed to the Rust world.

Since our application is almost entirely a simple CRUD API, we didn't have to think much about memory management. Rust does not have a garbage collector; instead, memory safety is enforced at compile time through its ownership rules, different kinds of references, and so-called lifetime annotations. Because our application just takes values and hands them over to a database, this is mostly trivial: our code consists of nested functions, each of which passes ownership of an object to the next function it invokes and receives it back once that function is finished. If you have to model complex business logic that requires holding references to an entity in more than one place, this could become a lot harder. Still, we think the lead Rust has over its contenders in resource efficiency is significant enough to look further into Rust as a way to provide web backend APIs.
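The ownership hand-through style described above, and the shared-ownership case that makes things harder, can be sketched as follows. The TransferRequest and Account types and the validate, persist, and handle functions are hypothetical illustrations, not code from our services. Rc with RefCell is only one common way to share a mutable entity in single-threaded code; multi-threaded code would typically use Arc with a Mutex instead.

```rust
use std::cell::RefCell;
use std::rc::Rc;

// Hypothetical request type; the field names are illustrative only.
#[derive(Debug)]
struct TransferRequest {
    from: u64,
    to: u64,
    amount_cents: i64,
}

// Hand-through style: each step takes ownership of the value and hands it back
// to the caller when it is done, so there is exactly one owner at any time and
// no explicit lifetime annotations are needed.
fn validate(req: TransferRequest) -> Result<TransferRequest, String> {
    if req.amount_cents <= 0 || req.from == req.to {
        return Err(format!("invalid transfer: {req:?}"));
    }
    Ok(req)
}

fn persist(req: TransferRequest) -> TransferRequest {
    // A real service would write to the database here.
    req
}

fn handle(req: TransferRequest) -> Result<TransferRequest, String> {
    let req = validate(req)?;
    Ok(persist(req))
}

// When the same entity must be reachable from several places, single ownership
// no longer fits. Rc provides shared ownership, and RefCell allows mutation
// through it by moving the borrow checks to run time.
#[derive(Debug)]
struct Account {
    balance_cents: i64,
}

fn main() {
    let done = handle(TransferRequest { from: 1, to: 2, amount_cents: 500 });
    println!("{done:?}");

    let account = Rc::new(RefCell::new(Account { balance_cents: 1_000 }));
    let held_by_cache = Rc::clone(&account);
    let held_by_session = Rc::clone(&account);
    held_by_session.borrow_mut().balance_cents -= 500;
    println!("{}", held_by_cache.borrow().balance_cents); // prints 500
}
```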

If you use Spring-Boot, like most Java projects do, and don't want to switch to a completely different programming language, Quarkus is a good option. With the caveat that the use case in our experiments has its limits, we can say that Quarkus, native or JVM, offers solid performance benefits over Spring-Boot, and its loss in throughput against Rust is small compared to its lead over Spring-Boot. Spring-Boot is said to have the edge when it comes to functionality provided by the framework, but it remains to be seen how much of this plays out in a real-world project. Bear in mind that Quarkus is still relatively new, so further progress can be expected here. In the Java world, it is advisable to explore Quarkus further in larger-scale projects and see how it fares.